
Parallelizing Stellar Core: The First Step Toward 5000 TPS

Author: Marta Lokhava


This blog is part of our ongoing series on the progress made on the Stellar Development Foundation's 2025 roadmap and the key initiatives we're driving to improve usability, expand adoption, and strengthen the Stellar ecosystem. This post builds on the plans introduced in The Road to 5000 TPS: Scaling Stellar in 2025.

At the Stellar Development Foundation (SDF), our focus is on supporting real-world use cases and meeting the evolving demands of the Stellar ecosystem. Historically, validators, who determine network configurations, agreed on ledger limits based on observed usage, striking a balance between reasonable ledger acceptance and fair fees for network participants.

With the introduction of Soroban, we've seen new users and emerging use cases. Over the past few months, Soroban usage has grown significantly, and validators will likely decide to adjust network limits in response. However, raising these limits is no simple task: we must ensure the network stays healthy under increased load. Looking ahead, our short- to medium-term goals are to reach a theoretical throughput of 5000 transactions per second and to reduce block time from the current 5 seconds to 2.5 seconds.

To support this evolution, SDF began a series of major upgrades to Stellar Core last year aimed at enhancing scalability and paving the way for higher throughput and lower block times. We designed these changes to take advantage of spare CPU capacity already present on most modern computers. The best part: no hardware upgrades are needed. Stellar Core simply makes smarter use of existing CPU cores.

In a series of upcoming blog posts, we'll walk through the performance improvements (some already implemented, some planned) and how they help prepare Stellar for the future.

First, let us articulate the vision for scalable Stellar Core. What do we want Stellar Core to look like once all the planned changes are complete?

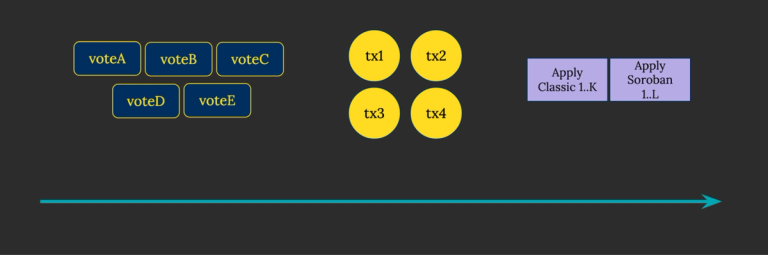

Conceptually, Stellar Core's work can be grouped into three streams:

- Disseminating new transactions: Network entry points such as Horizon and Stellar RPC continuously submit new transactions to the Stellar network. Every Stellar Core that receives a new transaction helps disseminate it by validating and sending the transaction to other Stellar Cores it knows about. Eventually, all Stellar Cores on the network receive the transaction.

- Agreeing on a transaction set to confirm: the Stellar Consensus Protocol (SCP) requires validators to exchange messages with other validators they trust. This stream of work involves the validator's own voting, as well as helping disseminate the votes of other validators.

- Executing the confirmed transaction set: when the network agrees on a block via the Stellar Consensus Protocol, Stellar Core applies the block to the database, at which point we consider the transactions confirmed.

If we were to visualize these three streams, it would look like Figure 1a, with the blue arrow being the main thread.

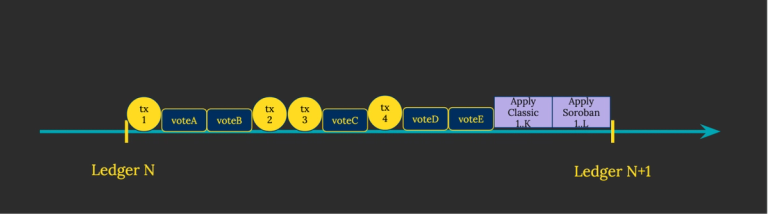

Prior to v22.1.0, Stellar Core executed all three streams of work on the main thread. Figure 1b gives an idea of how this work may be executed on the main thread. It can get busy!

A couple of important observations from these diagrams:

- Transactions (yellow) and votes (blue) compete for main-thread time, introducing unnecessary delays for both streams.

- Block execution (purple) typically takes a non-trivial amount of time: think hundreds of milliseconds, even seconds, which is a lot given that our goal is to reduce end-to-end latency to 2.5 seconds! Stalling the main thread for that long adds even more latency to the other two streams.

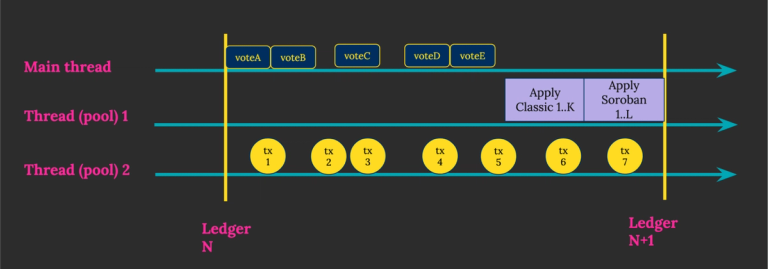

To remedy this situation, we first made two important changes (illustrated in Figure 2):

- Dedicate a separate thread/thread pool to transaction processing, thereby removing CPU competition between consensus and transaction processing.

- Dedicate a separate thread/thread pool to execution of blocks, allowing the main thread to focus purely on the consensus flow without block execution stalling consensus.
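To make this split concrete, here is a toy Python sketch; it is not Stellar Core's actual code (which is written in C++). The names `Node`, `validate_tx`, `on_transaction`, and `on_block_agreed` are hypothetical, and a simple hash chain stands in for real block execution. The caller plays the role of the main thread: it hands transactions to a pool and agreed-upon blocks to a single apply thread, and never waits on either.

```python
import hashlib
from concurrent.futures import Future, ThreadPoolExecutor


def validate_tx(tx: bytes) -> bool:
    # Hypothetical stand-in for real checks (signatures, fees, sequence numbers).
    return len(tx) > 0


class Node:
    """Toy node: the caller acts as the main thread and only runs consensus."""

    def __init__(self) -> None:
        # Transaction processing: many transactions can be validated at once.
        self.tx_pool = ThreadPoolExecutor(max_workers=4)
        # Block execution: one worker, so blocks are applied one at a time, in order.
        self.apply_thread = ThreadPoolExecutor(max_workers=1)
        self.ledger_hash = bytes(32)  # only ever written on the apply thread

    def on_transaction(self, tx: bytes) -> Future:
        # Returns immediately; validation happens on the transaction pool.
        return self.tx_pool.submit(validate_tx, tx)

    def on_block_agreed(self, txs: list[bytes]) -> Future:
        # Returns immediately, so a slow block cannot stall consensus.
        return self.apply_thread.submit(self._apply, txs)

    def _apply(self, txs: list[bytes]) -> bytes:
        # Stand-in for block execution: chain the previous hash with each tx hash.
        h = hashlib.sha256(self.ledger_hash)
        for tx in txs:
            h.update(hashlib.sha256(tx).digest())
        self.ledger_hash = h.digest()
        return self.ledger_hash

    def shutdown(self) -> None:
        self.tx_pool.shutdown(wait=True)
        self.apply_thread.shutdown(wait=True)


node = Node()
tx_ok = node.on_transaction(b"payment")
block_1 = node.on_block_agreed([b"a", b"b"])
block_2 = node.on_block_agreed([b"c"])
# ... the main thread would keep running SCP here ...
print(tx_ok.result())           # True
print(len(block_2.result()))    # 32
node.shutdown()
```

A single-worker executor is used for blocks because ledgers must be applied one at a time and in order, while transaction validation has no such ordering constraint and can fan out across several workers.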

Note that this new flow still has a dependency: consensus and block execution remain sequential. For high throughput and low block times, this is not ideal for two reasons:

- Our minimum block time depends on the sum of consensus and execution latencies.

- CPU capacity sits idle. Under normal conditions, the main thread running the Stellar Consensus Protocol is essentially idle while a separate thread executes the most recent block. Similarly, while validators run SCP to agree on a block, their CPUs are mostly idle, not executing any new blocks. Of course, validators still perform some CPU work while running SCP (such as processing votes and verifying their signatures), but the amount is minuscule compared to CPU-heavy execution.
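A back-of-the-envelope model helps quantify both reasons. The sketch below assumes fixed, hypothetical per-ledger latencies (the 1.0 s and 1.5 s values are illustrative, not measurements) and ignores everything except the strict alternation of SCP and execution.

```python
def sequential_schedule(consensus_s: float, execution_s: float, ledgers: int):
    """Model the flow where SCP and block execution strictly alternate.

    Returns the time at which each ledger finishes executing, plus the
    fraction of wall-clock time the execution thread pool is busy.
    """
    close_times = []
    t = 0.0
    for _ in range(ledgers):
        t += consensus_s  # SCP round: the execution pool has nothing to do
        t += execution_s  # apply the block: SCP for the next ledger waits
        close_times.append(t)
    execution_utilization = (execution_s * ledgers) / t if t else 0.0
    return close_times, execution_utilization


times, util = sequential_schedule(consensus_s=1.0, execution_s=1.5, ledgers=4)
print(times)  # [2.5, 5.0, 7.5, 10.0]
print(util)   # 0.6
```

In this model a ledger closes every consensus + execution = 2.5 s, and the execution pool sits idle 40% of the time waiting on SCP.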

The Endgame: Pipelining

Enter the endgame: true pipelining of voting and block execution (Figure 3).

Initially proposed by SDF's CTO, Nico Barry, this design presents several interesting properties:

- Pipelined consensus and execution:

  - While the network is applying block N, it is simultaneously voting on block N+1. By the time execution of N completes, block N+1 is ready to apply.

  - This architecture keeps each major component continuously active: the execution thread pool is always applying, the consensus thread is always voting, and the transaction dissemination thread pool is always broadcasting.

  - This opens the door to lower block times, because the minimum block time becomes max(consensus latency, execution latency), a significant improvement over the previous consensus latency + execution latency.

- Conflict-free blocks: we've designed blocks for ledgers N and N+1 to be conflict-free, helping ensure that blocks agreed upon through consensus can be safely validated. The design mitigates the risk of denial-of-service (DoS) attacks in which malicious actors attempt to introduce blocks where transactions in an earlier block could invalidate those in a subsequent one, potentially reducing throughput to zero.
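A small timing model shows where max(consensus latency, execution latency) comes from. This is a simplified sketch under stated assumptions: latencies are fixed, hypothetical values, and SCP for ledger N+1 may start only once execution of ledger N has begun, so at most one agreed-upon block waits to be applied.

```python
def pipelined_schedule(consensus_s: float, execution_s: float, ledgers: int):
    """Return the time at which each ledger finishes executing when SCP for
    ledger N+1 overlaps with execution of ledger N (a two-stage pipeline)."""
    close_times = []
    scp_end = 0.0          # when SCP for the previous ledger finished
    prev_exec_start = 0.0  # when execution of the previous ledger started
    exec_end = 0.0         # when execution of the previous ledger finished
    for _ in range(ledgers):
        # Vote on the next ledger once the previous vote is done and the
        # previously agreed block has been handed to the execution pool.
        scp_start = max(scp_end, prev_exec_start)
        scp_end = scp_start + consensus_s
        # Apply once this ledger is agreed and the previous one is applied.
        exec_start = max(scp_end, exec_end)
        exec_end = exec_start + execution_s
        close_times.append(exec_end)
        prev_exec_start = exec_start
    return close_times


times = pipelined_schedule(consensus_s=1.0, execution_s=1.5, ledgers=4)
print(times)                                      # [2.5, 4.0, 5.5, 7.0]
print([b - a for a, b in zip(times, times[1:])])  # [1.5, 1.5, 1.5]
```

After the first ledger, ledgers close every max(1.0, 1.5) = 1.5 s rather than 1.0 + 1.5 = 2.5 s. Swapping the two latencies yields the same close times: whichever stage is slower sets the pace.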

So how do we achieve this vision? The rest of this blog post will detail the first set of changes we made and enabled in Stellar Core v22.1.0 to support parallel dissemination of transactions. Subsequent blog posts will focus on the new experimental parallel execution feature, and changes planned to achieve the vision described above.

Part I: Isolating Dissemination

Logically, transaction dissemination is a fairly isolated component: consensus and execution do not directly depend on it. During dissemination, Stellar Core performs a significant amount of expensive cryptographic work, including signature verification, hashing, and custom authentication, as well as processing incoming and outgoing bytes. This work is CPU-intensive but decoupled from the application's business logic.

Because these workloads are both partitioned and independent, they are well-suited to parallelization. That’s why we tackled this area first. Partitioned data sets eliminate concerns around data races and deadlocks, allowing us to scale performance more safely and efficiently.
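A minimal sketch of why partitioned work parallelizes cleanly: each worker checks exactly one transaction and touches no shared mutable state, so no locks are needed. The `IncomingTx` type and its `claimed_digest` field are hypothetical, and the SHA-256 comparison is only a stand-in for real Ed25519 signature verification, which Python's standard library does not provide.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class IncomingTx:
    payload: bytes
    claimed_digest: bytes  # hypothetical stand-in for a signature


def verify(tx: IncomingTx) -> bool:
    # Reads only its own (immutable) transaction: no shared state, no locks.
    return hashlib.sha256(tx.payload).digest() == tx.claimed_digest


def verify_batch(txs: list[IncomingTx], workers: int = 4) -> list[bool]:
    # Each transaction is an independent partition of the work.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(verify, txs))


good = IncomingTx(b"payment", hashlib.sha256(b"payment").digest())
bad = IncomingTx(b"payment", hashlib.sha256(b"tampered").digest())
print(verify_batch([good, bad, good]))  # [True, False, True]
```

`ThreadPoolExecutor.map` returns results in input order, so verdicts line up with transactions without extra bookkeeping. In CPython the GIL limits how much CPU-bound Python code truly runs in parallel; the point here is the lock-free structure, not the speedup.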

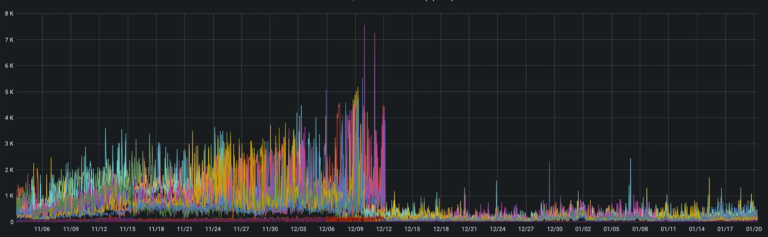

Beyond the cryptographic workload, Stellar Core now also performs traffic deduplication in the background, which its new parallel infrastructure makes possible. This removes most of that burden from the main thread and contributes to smoother overall performance, as observed in Figure 4.
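Background deduplication can be pictured as a thread that sits between the network and the main thread: it hashes each incoming message and forwards only the ones it has not seen before. This is an illustrative Python model with hypothetical names (`dedup_worker`, `run_with_background_dedup`), not Stellar Core's actual flooding logic; among other things, a real node would also need to bound the size of its seen-set.

```python
import hashlib
import queue
import threading

_STOP = object()  # sentinel marking the end of the incoming stream


def dedup_worker(inbox: queue.Queue, main_inbox: queue.Queue) -> None:
    seen = set()  # hashes already forwarded; owned by this thread only
    while True:
        msg = inbox.get()
        if msg is _STOP:
            main_inbox.put(_STOP)
            return
        digest = hashlib.sha256(msg).digest()
        if digest in seen:
            continue  # duplicate: dropped without ever reaching the main thread
        seen.add(digest)
        main_inbox.put(msg)


def run_with_background_dedup(messages: list[bytes]) -> list[bytes]:
    inbox: queue.Queue = queue.Queue()
    main_inbox: queue.Queue = queue.Queue()
    worker = threading.Thread(target=dedup_worker, args=(inbox, main_inbox))
    worker.start()
    for m in messages:
        inbox.put(m)  # network side: push everything, duplicates included
    inbox.put(_STOP)
    delivered = []
    while True:  # main-thread side: sees each unique message once
        msg = main_inbox.get()
        if msg is _STOP:
            break
        delivered.append(msg)
    worker.join()
    return delivered


print(run_with_background_dedup([b"tx1", b"tx2", b"tx1", b"tx3", b"tx2"]))
# [b'tx1', b'tx2', b'tx3']
```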

Try it out!

Parallel processing on your Stellar Core is controlled by the configuration flag `BACKGROUND_OVERLAY_PROCESSING`. As of Stellar Core v22.1.0, this flag is on by default, so you automatically get the benefits of parallelization.

Deploying v22.1.0 yielded a sharp drop in main-thread pressure; in other words, the amount of work in the main thread's backlog at any given time has gone down significantly. Figure 4 illustrates the drop, as observed on SDF's validators as well as on Horizon instances.

Starting with v22.3.0, Stellar Core can take full advantage of background transaction processing by also performing signature verification in the background. To do so, enable the new flag `EXPERIMENTAL_BACKGROUND_TX_SIG_VERIFICATION`.

Part II: Isolating Execution

This is just the beginning of the journey toward a fully parallelized Stellar Core. In the next installment of this blog series, we’ll dive into the second major improvement: isolating execution. Stay tuned!