Blog Article

How to validate blockchain code with Stellar Supercluster

Author

Veronica Irwin

Why do we need to test blockchain upgrades?

Stellar is so useful because it’s dependable. World aid organizations, major international companies, and migrants sending remittances don’t really get down with ‘move fast, break things’ — they need to be able to send and receive remittances, aid, and other types of payments reliably and with ease.

That means that developers working on Stellar Core can’t just write code and push it to pubnet. Instead, code must be tested before it runs on the public network to make sure it actually works. Upgrades must be seamless, so users face no interruptions in transacting on the network.

Stellar already has two networks separate from the public network (pubnet) that can be used for some experimentation: testnet and futurenet. But testnet is for community members who want to try out transacting on Stellar, and thus can’t be interfered with to test out Core upgrades. Futurenet, meanwhile, is limited to testing Soroban at present.

That’s why Stellar Supercluster, a set of “missions” that perform automated tests of Stellar Core networks, exists: it can be used to validate code before it goes live on pubnet. You can see the full list of simulated environments in the GitHub repository. The simulations involve running containerized Core nodes in self-contained networks, then feeding them traffic to test their capacity. Stellar Supercluster replaced an older tool called Stellar Core Commander, using Kubernetes for easier orchestration.

To demonstrate how Stellar Supercluster works, let’s take a look at two related missions: MissionSimulatePubnet and MissionSimulatePubnetTier1Perf. As their names suggest, these two missions simulate pubnet. SDF developer Hidenori Shinohara used them to test a new transaction broadcasting improvement called pull mode (more on that to come).

Building a simulated environment

When Hidenori was designing pull mode, his aim was to optimize transaction propagation in a way that improves transactions per second (TPS). But he couldn’t just improve this process in a way that theoretically made sense. He had to show the rest of the community that it actually improved TPS in a tangible way.

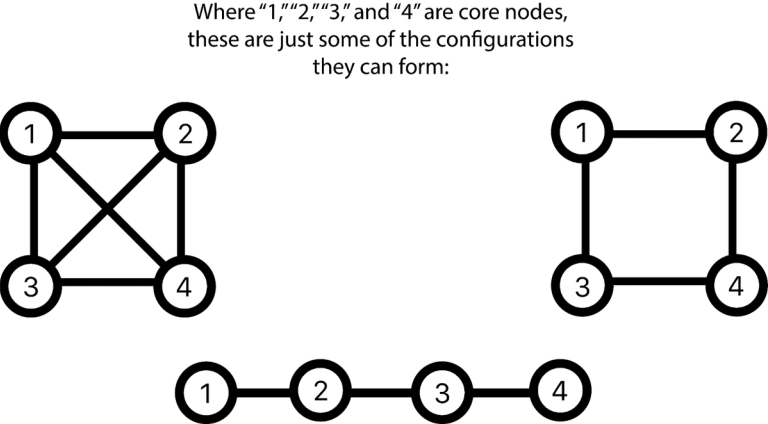

To prove this, he needed to first understand the network topology, or how various nodes are connected to each other. The Stellar Consensus Protocol (SCP) requires nodes to reach agreement with other nodes they trust — and depending on which nodes the other nodes decide to trust, there are near-infinitely many different configurations they can form.

The surveytopology command allows developers to take a snapshot of the network topology at a given time, and issuing this command at different times can give someone an idea of just some of the ways nodes may be connected. But this isn’t likely to be a comprehensive survey, because nodes can opt out of detection by this command, and because the network topology might look completely different within minutes of issuing the command. Additionally, while nodes are sometimes directly connected to each other in a fairly efficient configuration, other times they are misconfigured, creating excess noise in the results. To properly simulate what the impact of a new feature might be, a developer needs to test out numerous hypothetical configurations, no matter what they see after issuing the surveytopology command.

Second, Hidenori needed to understand network delays. Nodes that are geographically farther apart take slightly longer to exchange information. But once the locations of two nodes are known, one can estimate the network delay between them by dividing the distance by the speed of light.
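The distance-over-speed-of-light estimate can be sketched in a few lines of Python. This is only an illustration, not code from the Supercluster repository: the function names are made up for this example, and using the great-circle (haversine) distance between two latitude/longitude pairs is an assumption about how "distance" might be measured. The result is a lower bound, since real links are slower than light in a vacuum and rarely follow the shortest path.

```python
import math

SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in a vacuum
EARTH_RADIUS_KM = 6371.0               # mean Earth radius


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point overshoot before asin.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def one_way_delay_ms(lat1, lon1, lat2, lon2):
    """Estimated one-way delay in milliseconds: distance / speed of light."""
    distance_km = great_circle_km(lat1, lon1, lat2, lon2)
    return distance_km / SPEED_OF_LIGHT_KM_PER_S * 1000.0


if __name__ == "__main__":
    # Approximate coordinates for Frankfurt and Singapore (illustrative only).
    delay = one_way_delay_ms(50.11, 8.68, 1.35, 103.82)
    print(f"Estimated one-way delay: {delay:.1f} ms")
```

A simulation could apply a delay like this to every pair of connected nodes, so that distant peers hear about transactions later than nearby ones.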

Last, Hidenori needed to determine the transaction rates and sizes in order to simulate the system. But this piece is easy, given Stellar’s immutability and transparency. Anyone can look at the network’s ledger history and see how large transactions are and how often they arrive on average, and apply those figures to a simulation of the network.
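As a rough sketch of that calculation, the snippet below averages transaction rate and size over a window of ledgers. The `LedgerRecord` type and its field names are hypothetical and do not correspond to any real Stellar API, and the sample values are invented purely to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class LedgerRecord:
    # Hypothetical record shape; field names are assumptions for this sketch.
    close_time: int      # Unix timestamp (seconds) when the ledger closed
    tx_count: int        # transactions included in the ledger
    total_tx_bytes: int  # combined size of those transactions, in bytes


def average_rate_and_size(ledgers):
    """Return (transactions per second, mean transaction size in bytes)."""
    ordered = sorted(ledgers, key=lambda ledger: ledger.close_time)
    if len(ordered) < 2:
        raise ValueError("need at least two ledgers to measure a rate")
    elapsed = ordered[-1].close_time - ordered[0].close_time
    if elapsed <= 0:
        raise ValueError("ledgers must span a positive amount of time")
    # The first ledger only marks the start of the window, so its
    # transactions are left out of the totals.
    counted = ordered[1:]
    tx_total = sum(ledger.tx_count for ledger in counted)
    byte_total = sum(ledger.total_tx_bytes for ledger in counted)
    mean_size = byte_total / tx_total if tx_total else 0.0
    return tx_total / elapsed, mean_size


if __name__ == "__main__":
    # Invented sample values, not real pubnet measurements.
    sample = [
        LedgerRecord(close_time=0, tx_count=100, total_tx_bytes=30_000),
        LedgerRecord(close_time=5, tx_count=120, total_tx_bytes=36_000),
        LedgerRecord(close_time=10, tx_count=80, total_tx_bytes=24_000),
        LedgerRecord(close_time=15, tx_count=100, total_tx_bytes=30_000),
    ]
    tps, size = average_rate_and_size(sample)
    print(f"{tps:.1f} tx/s, {size:.0f} bytes per transaction")
```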

Together, these calculations were used to build the basic architecture of a simulated pubnet, AKA the Stellar Supercluster missions MissionSimulatePubnet and MissionSimulatePubnetTier1Perf.

Validating blockchain code

Once the network is modeled, it can then be tested against different scenarios. In this instance, to test for TPS, three simulations were performed:

- One which tests something close to the current, real network,

- One which tests potential variant configurations of the network,

- And one which tests the upper limit on the number of transactions that can be performed.

The first two simulations are fairly self-explanatory. To replicate the real network, SDF engineers ran one Docker container for each node in an AWS cluster and used Kubernetes to tie them together. Then, knowing what transactions have historically looked like, engineers could issue mock transactions in the simulated network that mimicked real-world behavior. Nodes could be connected in different ways to see how various configurations impacted TPS when pull mode was implemented versus when it was not. Models were built for highly connected networks as well as those where validating nodes are not directly connected, for example. This way, just a handful of computers could be used to mimic the real network reliably enough to see how pull mode might impact TPS.
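To see why the way nodes are connected matters, here is a small Python sketch that compares a fully connected network with a sparse ring. It is not taken from the Supercluster repository. The `full_mesh`, `ring`, and `max_hops` helpers are invented for illustration, and hop count is used only as a rough stand-in for how far a transaction has to travel.

```python
from collections import deque


def full_mesh(n):
    """Every node is directly connected to every other node."""
    return {i: {j for j in range(n) if j != i} for i in range(n)}


def ring(n):
    """Each node is connected only to its two neighbours."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


def max_hops(graph, source):
    """Breadth-first search: the most hops a message from `source`
    needs to reach any other node in the graph."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for peer in graph[node]:
            if peer not in dist:
                dist[peer] = dist[node] + 1
                queue.append(peer)
    if len(dist) != len(graph):
        raise ValueError("graph is not connected")
    return max(dist.values())


if __name__ == "__main__":
    for name, graph in (("full mesh", full_mesh(8)), ("ring", ring(8))):
        print(f"{name}: up to {max_hops(graph, 0)} hop(s) from node 0")
```

With eight nodes, a message reaches everyone in one hop in the full mesh but needs up to four hops around the ring, which is the kind of difference that shows up when a broadcast change is tested against several configurations.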

Limit testing for TPS follows a similar process. Instead of mimicking transactions seen on pubnet, engineers flooded the network with as many transactions as possible until the system broke. This demonstrates the maximum number of transactions the network could handle, with and without the pull mode feature.
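The idea of pushing load until something breaks can be sketched as a simple ramp. This is a hypothetical illustration, not how the Supercluster missions are implemented: `sustains` is an assumed callback that would run the simulated network at a given rate and report whether it kept up, and the step sizes are arbitrary.

```python
def find_breaking_point(sustains, start_tps=100, step_tps=100, ceiling_tps=100_000):
    """Raise the offered load step by step.

    Returns the last rate the network sustained, or None if it failed
    at the very first rate. `sustains(rate)` is a hypothetical callback
    that returns True when the network handled `rate` transactions/second.
    """
    last_ok = None
    rate = start_tps
    while rate <= ceiling_tps:
        if not sustains(rate):
            return last_ok
        last_ok = rate
        rate += step_tps
    return last_ok


if __name__ == "__main__":
    # Stand-in for a real run: pretend the network breaks above 750 TPS.
    fake_network = lambda tps: tps <= 750
    print(find_breaking_point(fake_network))
```

Running the same ramp once with a feature enabled and once without gives two breaking points that can be compared against each other.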

A perfect solution?

Simulating a network this way has some obvious benefits. With a fraction of the number of machines, developers can see an approximation of how well a new feature may work on pubnet, predict factors that might make it break, and smooth out any hitches that might hamper the user experience. It’s the only responsible way to introduce innovations that will make tangible and significant changes to the network.

There are some drawbacks, however. Because Stellar Supercluster simulations use such a small fraction of the CPU per node compared to the real network, numbers are only relative, not exact. For example, when Hidenori was testing for TPS, he could only compare whether TPS increased or decreased, rather than depend on the final TPS numbers produced by MissionSimulatePubnet and MissionSimulatePubnetTier1Perf. While he could observe the percentage increase or decrease in TPS, those percent changes also had to be taken as approximations. And because all nodes in a simulated environment have the same computational power and run on the same hardware, there’s a level of uniformity in Stellar Supercluster simulations which does not exist in the real network.
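In practice, that means reading results as a relative change between two runs rather than as absolute figures. A minimal sketch of the comparison follows. The function name is made up for this example, and the numbers are placeholders, not measurements from any mission.

```python
def relative_change_percent(baseline_tps, candidate_tps):
    """Percentage change of the candidate run relative to the baseline run."""
    if baseline_tps <= 0:
        raise ValueError("baseline TPS must be positive")
    return (candidate_tps - baseline_tps) / baseline_tps * 100.0


if __name__ == "__main__":
    # Placeholder values only: a simulated baseline run and a run with
    # the new feature enabled, both on the same simulated cluster.
    change = relative_change_percent(baseline_tps=200, candidate_tps=250)
    print(f"Simulated TPS changed by {change:+.1f}%")
```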

Despite the challenges, it’s hard to imagine a better solution for testing complete features in advance of pushing them to pubnet. Stellar Supercluster missions simulate aspects of the network quickly and with a small fraction of the computing power of pubnet, or even testnet or futurenet. For community developers who want to perform capacity planning on new code or test new features, Stellar Supercluster is the best tool currently available. Technical information detailing how to make a cluster can be found in the GitHub repository.